At the core of the Solr/Lucene search engine is an inverted index. The inverted index has a list of tokens and a list of the documents that contain those tokens. In order to index text, Solr needs to break strings of text into “tokens.” In English and Western European languages spaces are used to separate words, so Solr uses whitespace to determine what is a token for indexing. In a number of languages the words are not separated by spaces. Chinese, Japanese, (the C and J in CJK), Thai, and Vietnamese are common languages that do not use white space to separate words For languages that do not use spaces, a segmentation algorithm has to be used.

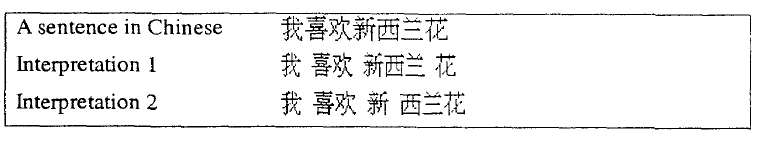

We will concentrate on Chinese word segmentation in this post. [1] Chinese word segmentation is an open problem in the research literature. Native speakers do not necessarily agree on how to segment groups of characters into words (Sproat, Shih, Gale, & Chang, 1994; Wu & Fung, 1994.[2]) The problem is that a group of characters might be segmented in different ways resulting in different meanings. There are Chinese jokes based on these ambiguities. In the example below from (Teahan, McNab, Wen, & Witten, 2000)the first segmentation means “I like New Zealand Flowers.” The second segmentation means “I like fresh broccoli”

The examples below are from (Wong et. al. 2010, p 38)

才能 as a two character word means "talent"

才 能 as two one character words means "only then able to"

学会 as a two character word means "society"

学 会 as two one character words means "learn how to"

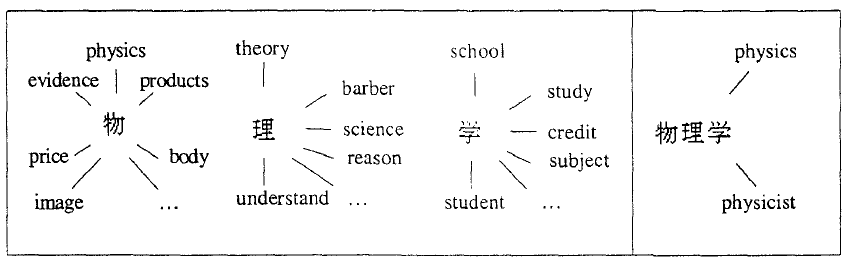

The figure below, Figure 3 from (Teahan et al., 2000) p 376., shows how the 3 character word for physics/physicist interpreted as single characters on the left could result in documents about evidence, barbers, and credit.

Segmentation approaches are generally either character-based or word-based. Segmenting into character unigrams or bigrams is computationally easy and requires no knowledge of the language (So it will work on Chinese, Japanese etc.) Word-based approaches are more accurate in terms of correct segmentation, but are computationally intensive and require dictionaries and/or a large appropriate training corpus. Dealing with ambiguities and out of vocabulary words is a problem with word based approaches (Fu, Kit, & Webster, 2008, Wong et.al 2010.)[[3]

Information retrieval research indicates that completely accurate segmentation is not necessary for decent retrieval ( Foo, S., & Li, H. 2004, Huang et.al. 2003, Kwok, 1999 ). In fact Huang et.al.( 2003) found that as segmentation accuracy increased above 70%, information retrieval performance declined. They attribute this to the fact that poor segmentation will tend to break compound words into smaller constituents. Often the smaller consituents of larger words have related meanings so this increases recall.[4]

As long as the same segmentation algorithm is used for both segmenting the query and segmenting the text for indexing, good retrieval is possible with character-based approaches.[5]Information retrieval research has generally found that simple approaches such as indexing overlapping character bigrams have comparable performance with more sophisticated word based approaches. As an example of overlapping bigrams, if the characters “ABCD” were Chinese characters the tokenizer would split them up into “AB” “BC” and “CD.”

Solr/Lucene implementation issues

Solr/Lucene offers a choice of 5 options for CJK tokenization[7]:

- StandardAnalyzer -- unigram indexing for C and J characters

- ChineseAnalyzer -- unigram indexing for C and J characters

- CJKAnalyzer -- overlapping bigram indexing for C and J characters[8]

- SmartChineseAnalyzer[9]– indexes words based on dictionary and heuristics. It only deals with Simplified Chinese. Simplified Chinese characters are used in the Peoples Republic of China, Singapore, and Malaysia. Traditional Chinese characters are used in Taiwan, Hong Kong, and Macau, and for works published in Mainland China published prior to 1949 .

- ICUTokenizer-- currently produces unigrams, but we have an issue open to change it to produce either overlapping bigrams or a combination of unigrams and bigrams.

The Solr QueryParser and CJK processing.

The Solr QueryParser had a bug which caused any string that is separated into separate tokens by the filter chain to be searched as a phrase query.[10] So until this bug was fixed, it didn’t matter which of the Analyzer/Tokenizers you used. Your Analyzer would break a string of CJK characters into unigrams, bigrams or words, but the QueryParser would then put them back together as a phrase. (See: https://issues.apache.org/jira/browse/LUCENE-2458).

While this may not seem like a problem for very short queries (1-3 characters,) for longer queries it can be a problem. For example a search for “中国对熊猫的保护”(Protection of Pandas) gets no hits when searched as a phrase in large scale search. However if it is searched as overlapping bigrams there are about 600 hits.[11]

This bug in the QueryParser is also what caused problems for us with words like “l’art” triggering a phrase search with a common word “l”. (See: http://www.hathitrust.org/blogs/large-scale-search/tuning-search-performance ). Prior to the Solr bug fix, we tried to get around the problem with a custom punctuation filter that did not split “l’art” into two tokens. However, we subsequently discovered that our custom punctuation filter caused a number of other problems.

The QueryParser bug was fixed with a configuration parameter in Solr 3.1. The fix implemented in Solr 3.1 allows setting autoGeneratePhraseQueries="false" in the fieldType definition for a field in the Solr schema.xml file. Since this parameter is set on a per-field basis and we have multiple languages in the OCR field, this affects both CJK and other languages.[12] In some Solr implementations this could be a problem depending on how other languages are tokenized. For example with the default Solr filters, “l’art” would get split into “l” and “art” and then would get searched as a Boolean query “l” AND “art” (or a Boolean query "l" OR "art" depending on your default query operator). In our situation this would lead to many false drops where the word “l” appears somewhere in a document (perhaps as someone’s initial) and the word “art” appears somewhere else in the document.

The current large scale search implementation

We discovered numerous problems with our custom “punctuation filter” which we were using to solve the problem with “l’art”. Among other things it was interacting with dirty OCR to create long strings of nonsense which added millions of nonsense “words” to our index. After some discussion with some of the Solr/Lucene committers we decided to use the ICUTokenizer. The ICUTokenizer is designed by a group of multilingual unicode experts to work well with multiple languages.[13] It is intelligent about when to remove or split on punctuation so it doesn’t split up “l’art” or “can’t” and does split “rain|snow|sleet” which is how tables sometimes show up in the OCR. The ICUTokenizer also provides segmentation for Lao, Myanmar, and Khmer, and segments Chinese and Japanese (Han and Kanji) characters into unigrams.

Unfortunately unigrams produce many false drops (Halpern, 2006. pp 65-66 ; Kwok, 1999, p 170). As an example of false drops in large scale search, a search for 大亚湾 (Daya Bay) produces over 700,000 hits when searched with the default unigram tokenization. If it is searched as overlapping bigrams there are about 5,000 hits and searched as a phrase about 4900.[14]

What we would really like to do is to use the ICUTokenizer and have C and J tokenized into both unigrams and overlapping bigrams. We need unigrams for single character queries. We need overlapping bigrams in order to overcome the false drops caused by unigrams. Until the code for the ICUTokenizer is modified to produce bigrams, we will be using unigrams. The temporary solution for the issues with unigrams is a list of suggestions to users to put Chinese “words” in quotes separated by spaces. We worked with one of the librarians in the Asia library and they put together a help page for searching HathiTrust: http://www.lib.umich.edu/node/26889.

Volunteer Java programmers needed found!

References

Abdou, S., & Savoy, J. (2006). Statistical and Comparative Evaluation of Various Indexing and Search Models. In Information Retrieval Technology (pp. 362-373).Lecture Notes in Computer Science. (4182) Springer Berlin http://dx.doi.org/10.1007/11880592_28.

Emerson, T. (2000). Segmenting chinese in unicode. 16th International Unicode Conference, Amsterdam, The Netherlands.

Feng, H., Chen, K., Deng, X., & Zheng, W. (2004). Accessor variety criteria for Chinese word extraction. Comput. Linguist., 30(1), 75-93.

Foo, S., & Li, H. (2004). Chinese word segmentation and its effect on information retrieval. Information Processing & Management, 40(1), 161-190. doi:10.1016/S0306-4573(02)00079-1

Fu, G., Kit, C., & Webster, J. J. (2008). Chinese word segmentation as morpheme-based lexical chunking. Information Sciences, 178(9), 2282-2296. doi: 10.1016/j.ins.2008.01.001.

Halpern, J. (2006). The role of lexical resources in CJK natural language processing. Proceedings of the Fifth SIGHAN Workshop on Chinese Language Processing, 64-71.

Hatcher, E., Gospodnetić, O., & McCandless, M. (2010). Lucene in action (2nd ed.). Greenwich, CT: Manning Publications.

Huang, Xiangji, Peng, Fuchun, Schuurmans, Dale, Cercone, Nick and Stephen E. Robertson (2003) Applying Machine Learning to Text Segmentation for Information Retrieval . Information Retrieval Volume 6, Numbers 3-4, 333-362, DOI: 10.1023/A:1026028229881

Kwok, K. L. (1999). Employing multiple representations for Chinese information retrieval. J. Am. Soc. Inf. Sci., 50(8), 709-723.

Luk, R. W. P., & Kwok, K. L. (2002). A comparison of Chinese document indexing strategies and retrieval models. ACM Transactions on Asian Language Information Processing (TALIP), 1(3), 225-268. doi: 10.1145/772755.772758.

Nie, J., Gao, J., Zhang, J., & Zhou, M. (2000). On the use of words and n-grams for Chinese information retrieval. In Proceedings of the fifth international workshop on on Information retrieval with Asian languages (pp. 141-148). Hong Kong, China: ACM. doi: 10.1145/355214.355235.

Nie, Jian-Yun, Ren, Fuji. (1999). Chinese information retrieval: using characters or words?. Information Processing & Management 35 (1999) p 443-462.

Peng, Fuchun, Huang, Xiangji , Schuurmans, Dale and Nick Cercone. "Investigating the Relationship of Word Segmentation Performance and Retrieval Performance in Chinese IR", Proceedings of the 19th Biennial International Conference on Computational Linguistics (COLING'02), Taipei, Taiwan, August 24-September 1, 2002.

Savoy, J. (2005). Comparative study of monolingual and multilingual search models for use with asian languages. ACM Transactions on Asian Language Information Processing (TALIP), 4(2), 163-189. doi: 10.1145/1105696.1105701.

Shi, L., Nie, J., & Bai, J. (2007). Comparing different units for query translation in Chinese cross-language information retrieval. In Proceedings of the 2nd international conference on Scalable information systems (pp. 1-9). Suzhou, China: ICST (Institute for Computer Sciences, Social-Informatics and Telecommunications Engineering).

Sproat, R., Shih, C., Gale, W., & Chang, N. (1994). A STOCHASTIC FINITE-STATE WORD-SEGMENTATION ALGORITHM FOR CHINESE. Proceedings of the 32nd Annual Meeting of the Association for Computational Linguistics, 66-73.

Teahan, W. J., McNab, R., Wen, Y., & Witten, I. H. (2000). A compression-based algorithm for chinese word segmentation. Comput.Linguist., 26(3), 375-393.

Wong, Kam-Fai, Li, Wenjie, Xu, Ruifeng, & Zhang, Zheng-sheng. (2010). Introduction to Chinese Natural Language Processing. Princeton, NJ: Morgan & Claypool (Synthesis Lectures on Human Language Technologies, edited by Graeme Hirst, volume 4), 2010

Wu, D., & Fung, P. (1994). Improving Chinese tokenization with linguistic filters on statistical lexical acquisition. In Proceedings of the fourth conference on Applied natural language processing (pp. 180-181). Stuttgart, Germany: Association for Computational Linguistics. Retrieved August 24, 2009, from http://portal.acm.org/citation.cfm?id=974399.

Xu, Ying, Randy Goebel, Christoph Ringlstetter and Grzegorz Kondrak. Application of the Tightness Continuum Measure to Chinese Information Retrieval. Proceedings of the Workshop on Multiword Expressions MWE2010, China, Beijing, COLING 2010, pp. 55-63, 2010

Endnotes:

[1]The Japanese writing system uses four different character sets, Kanji (characters borrowed from Chinese), Hirigana, Katakana, and borrowed Latin alphabet: romanji. For our purposes, Japanese words written in Kanji have similar issues to Chinese. The ICUTokenizer uses a dictionary based tokenizer for Thai. Vietnamese separates syllables with spaces, so the default of splitting on whitespace produces something somewhat analogous to unigrams. Korean separates words with spaces but has issues similar to decompounding in European languages.

[3] According to (Wong et. al 2010, p 61) Sixty percent of word segementation errors result from unknown words. Emerson (Emerson, 2000) gives a nice overview of word-based approaches and the various problems with out of vocabulary words including transliterations of foreign words and personal names. Wong et.al (Wong et. al. 2010) provide a much more in-depth discussion, including an overview of the algorithms used in the word-based approaches as well as examples of ambiguity and unknown words. See also http://www.basistech.com/whitepapers/n-gram-vs-morphological-analysis-EN.pdf for a good explanation of n-gram vs morphological segmentation of CJK and reference to Lucene and Solr.

[4] Huang et.al. (2003, p 358) found that as segmentation accuracy increases over 70% accuracy, that retrieval scores decline. They attribute this to the fact that poor segmentation will accidentally break compound words into smaller constituents which increases recall because the smaller consituents of larger words often have related meanings. This also helps to explain why bigram indexing is competitive with more sophisticated segmentation methods and why combining unigrams and bigrams produce better retrieval results. However, what is not exlained in the literature is why this effect is not offset by a decrease in precision which would be expected when the smaller constituents do not have a meaning related to the larger word.

[5]Foo and Li (2004) did systematic experimentation combining different segmentation methods for query and indexing and found that the best results are when the same segmentation is used for both query and indexing. At least when using Chinese search engines, users do not use spaces in their Chinese language queries, see examples in from the Sogou query logs in Xu et.al. (2010.) At one time Google was providing OCR with CJK segmented with a proprietary state-of-the-art segmenter. However, because we could not write a Lucene tokenizer that would segment words the same way that Google was segmenting the OCR, we were unable to take advantage of this segmentation. Additionally, HathiTrust receives OCR from works digitized from the Internet Archive and other sources that do not use the Google segmenter.

[6]It is estimated that 63%-75% of Chinese words in use are two character words and around 5-8% are unigrams, so this partially explains the effectiveness of bigrams(Nie and Ren 1999). Huang et.al. (2003) , Peng et.al. (2002), and Kwok (1999) also explain that breaking a larger word into bigrams acts similarly to decompounding in European languages as constituents of larger words often have related meanings. There are various approaches to combining unigrams and bigrams reported in the literature and it is not immediately apparent which approaches are relatively easy to implement in Solr.

[7] See "Lucene In Action; Hatcher, Gospodnetić, & McCandless, (2010) Section 4.8.3 "Analyzing Asian Languages" for options 1-4. There is also a recent contribution of a Japanese Morphological analyzer to lucene which has a segmentation mode:https://issues.apache.org/jira/browse/LUCENE-3305 and a segmenter contribution in progress https://issues.apache.org/jira/browse/LUCENE-2522

[8]Unfortunately the CJK analyzer segments anything other than ascii into bigrams (For example Hindi/Devenagari or Cyrillic). See Robert Muir’s comment in https://issues.apache.org/jira/browse/LUCENE-2906 (Confirmed as of Solr 3.3)

[9] https://issues.apache.org/jira/browse/LUCENE-1629and https://issues.apache.org/jira/browse/SOLR-1336

[10] The bug in the Solr query parser only affects filter chains that break a token into multiple tokens with different positions. For example the synonym filter which produces multiple tokens with the same position is unaffected by this bug.

[11]This is query number C25 in the TREC 5 (Text Retrieval Conference) Chinese retrieval track. See http://trec.nist.gov/pubs/trec5/t5_proceedings.html. topics are available at http://trec.nist.gov/data/topics_noneng/topics.CH1-CH28.chinese.english.gz

[12]In the long run https://issues.apache.org/jira/browse/LUCENE-2470should allow a filter chain to do language/script specific processing.

[13] See http://unicode.org/reports/tr29/for background and http://unicode.org/cldr/utility/breaks.jsp to test the rules. Background on ICU is at: http://site.icu-project.org/

[14]In our case with large scale search this effect is made worse in that we currently index entire documents and can’t currently give documents with query words that occur closer to each other a higher score due to efficiency issues in processing our huge positions indexes. This means that if one character occurs on page one and another on page 300, the match counts the same as if the two characters were adjacent to each other. See http://en.wikipedia.org/wiki/Daya_Bay for background of Daya Bay.

[15] Although the Information Retrieval literature is pretty consistent in recommending a combination of unigrams and bigrams, exactly how they can be combined with Solr remains to be determined. If we were indexing small fields we would copy the field containing CJK and index one field as bigrams and one as unigrams and then combine them with dismax to give much more weight to matches in the bigrams.

[16]The Blacklight project and the VuFind project would also benefit from this work. The tokenizer they currently use that is part of the SolrMARC project uses an older Lucene TokenStream API and will probably not be ported to recent versions of Solr/Lucene. See: http://groups.google.com/group/blacklight-development/browse_thread/thread/eac52ff017170664/212e4e46474e96b3?lnk=gst&q=unicodenormalizationfilterfactory#212e4e46474e96b3 http://groups.google.com/group/blacklight-development/browse_thread/thread/2d9e8c5546ed1ac7/db977a4a824bb4f9?lnk=gst&q=implementers+with+script+needs#db977a4a824bb4f9